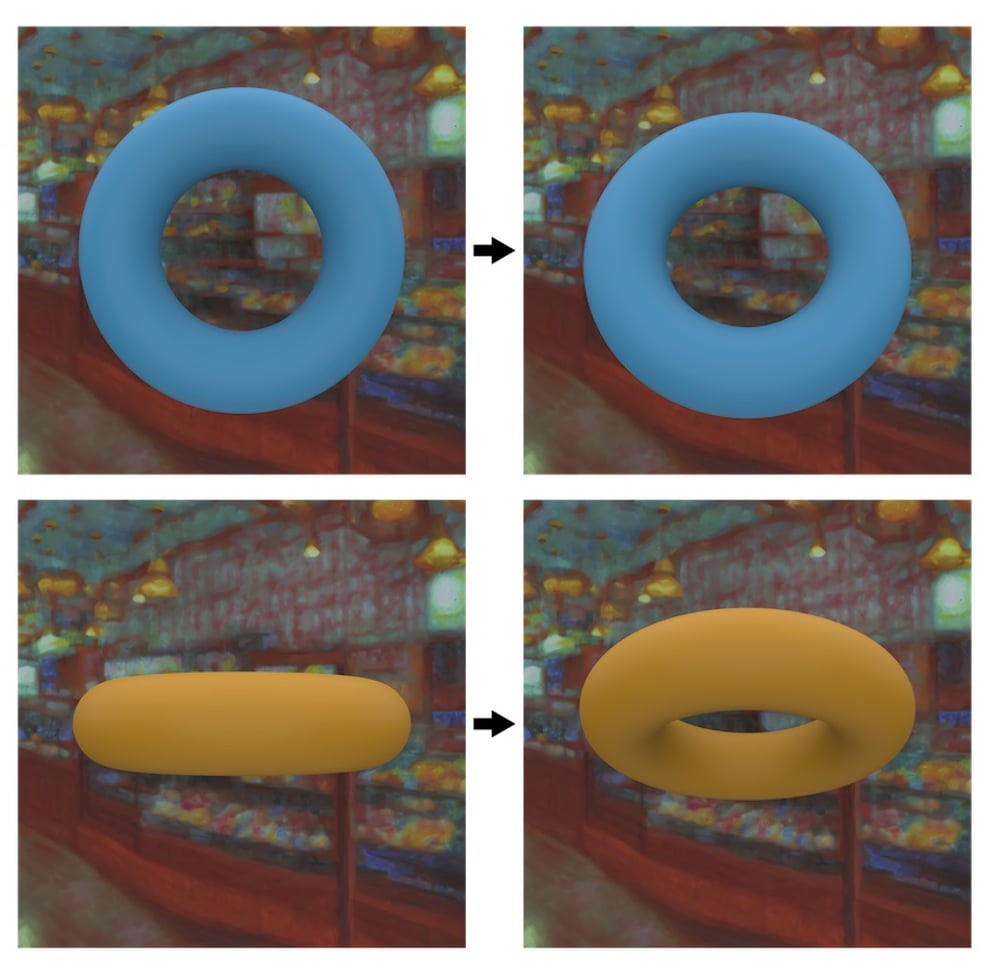

Look at the pairs of donuts: it probably looks as though the top, blue donuts are separated by a smaller rotational distance than the bottom, yellow donuts.

Actually, the top and bottom donut pairs are separated by exactly the same angular (rotational) distance, but we perceive the distances to be quite different. This is because our perception of objects can change, depending on the viewpoint we’re looking at them from.

2D mental rotation?

We humans have the remarkable ability to imagine things in our “mind’s eye,” and even to rotate and manipulate the imagined objects almost as if they were real. But the detailed workings of this rich inner world remain quite mysterious. In a recent study published in Current Biology, we uncover a new insight into the mechanisms underlying our visual imagination abilities.

When you think about rotating an object in your mind, you might have the impression that you are performing a literal physical rotation. Indeed, one of the most significant landmarks in experimental psychology was the discovery of mental rotation, and for decades we have assumed that when we rotate objects in our mind, we simulate an exact mental analogy of the physical rotation that would occur in the real world. However, the mechanisms in the brain that allow us to have such good mental rotation abilities are poorly understood.

Luckily, however, humans make small but systematic mental rotation errors all the time. If you hold up your smartphone so that the screen is facing toward you, and rotate it slightly, you probably won’t notice much difference. If you hold the phone up with the bottom edge facing you, and rotate it by the same amount, you will notice much more of a difference. We wanted to use perceptual errors like these to understand how the brain actually achieves mental rotation.

To test this, volunteers completed an experiment in which they looked at pairs of computer-generated objects on a screen and were asked to report which pairs appeared to have more similar orientations. This showed that observers interpret some pairs of objects as rotated by different angles, even though the physical rotation was always the same.

To gain insights into the brain mechanisms that cause these errors, we needed a computational model of the underlying mental operations.

When something moves in front of our eyes, the brain computes the path that it has taken in 2D coordinates, a process scientists refer to as “optical flow.” The Giessen researchers created a model that simulates the 2D optical flow that would arise as the object rotates, and compared the simulations to the human data.
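To make the idea concrete, here is a minimal sketch of how the same 3D rotation can produce very different amounts of 2D motion depending on viewpoint. It is not the model from the study: the flat grid of points standing in for a phone, the orthographic projection, the 10-degree angle and all function names are assumptions chosen purely for illustration.

```python
import math

def rotate_y(point, angle):
    """Rotate a 3D point about the vertical (y) axis by `angle` radians."""
    x, y, z = point
    c, s = math.cos(angle), math.sin(angle)
    return (c * x + s * z, y, -s * x + c * z)

def project(point):
    """Orthographic projection (an assumption): drop depth z, keep x and y."""
    x, y, _ = point
    return (x, y)

def mean_flow(points, angle):
    """Mean 2D displacement of the projected points after the 3D rotation."""
    total = 0.0
    for p in points:
        x0, y0 = project(p)
        x1, y1 = project(rotate_y(p, angle))
        total += math.hypot(x1 - x0, y1 - y0)
    return total / len(points)

# Hypothetical flat "phone": a 1-by-2 grid of sample points.
grid = [(-0.5 + 0.25 * i, -1.0 + 0.25 * j) for i in range(5) for j in range(9)]

# Screen facing the viewer: the phone lies in the image (x-y) plane.
facing = [(u, v, 0.0) for u, v in grid]
# Bottom edge facing the viewer: the long axis points along depth (z).
edge_on = [(u, 0.0, v) for u, v in grid]

angle = math.radians(10)  # identical physical rotation in both cases
flow_facing = mean_flow(facing, angle)
flow_edge_on = mean_flow(edge_on, angle)

print(f"screen facing viewer: mean 2D flow = {flow_facing:.4f}")
print(f"edge facing viewer:   mean 2D flow = {flow_edge_on:.4f}")
```

In this toy setup, the edge-on phone produces far more 2D motion than the screen-facing phone, even though both were rotated by the same angle. That mirrors the smartphone example: the physical rotation is identical, but the image-plane motion is not.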

The results were striking. The model predicted the specific pattern of human errors with incredible accuracy.

These results suggest that when we do mental rotation, we actually simulate a 2D rendering of the 3D information, rather than rotating objects in our minds as we would in the physical world. The findings suggest that our inner world may be a mere flattened rendering of reality, much like a video game is a 2D depiction of a 3D world.

The long-standing assumption that mental rotation is an exact analogy to physical rotation has influenced not only how we think about humans’ capacity for mental imagery, but also about human intelligence, spatial reasoning and navigation. These findings provide a new perspective on these processes, with implications for how the brain learns to see in the first place by comparing what is imagined with what is observed when we interact with the world.